How We Implemented Large Language Models (LLMs) in the 'Break Into Data' Server in 3 Simple Steps

Learn How To Integrate OpenAI's LLM to Parse, Organize, and Store Discord Messages in a Database.

Before we get into the nitty-gritty of this project, let’s explore how users interact within our Discord server.

Our community, “Break Into Data”, has an accountability system where you can commit to your goals, and our bot keeps track of your daily submissions against them.

We have 5 categories you can choose from:

Coding

Studying

Meditation

Fitness

Content Creation

After you commit to your goals, you can start submitting daily by attaching a screenshot as proof.

At the end of the week we aggregate how many days you were able to submit and place you on a global leaderboard!

We use the leaderboard during our weekly calls to review our progress and keep each other accountable.
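To make the weekly aggregation concrete, here is a minimal sketch of how counting distinct submission days per user could look. It is not the code our bot runs: the function name, the `(user, day)` tuple layout, and the sample users and dates are all made up for illustration.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekly_leaderboard(submissions, week_start):
    """Rank users by how many distinct days of the week they submitted on."""
    week_end = week_start + timedelta(days=7)
    days_by_user = defaultdict(set)
    for user, day in submissions:
        if week_start <= day < week_end:
            days_by_user[user].add(day)  # a set, so a day only counts once
    ranked = [(user, len(days)) for user, days in days_by_user.items()]
    # Most active users first; ties are broken alphabetically.
    return sorted(ranked, key=lambda row: (-row[1], row[0]))


# Hypothetical sample data: (user, submission day).
example = [
    ("user_a", date(2024, 1, 1)),
    ("user_a", date(2024, 1, 2)),
    ("user_a", date(2024, 1, 2)),  # second submission on the same day
    ("user_b", date(2024, 1, 1)),
    ("user_b", date(2024, 1, 9)),  # falls outside the week, ignored
]
print(weekly_leaderboard(example, date(2024, 1, 1)))
```

Using a set per user is what makes this a count of days rather than a count of messages.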

If you need accountability buddies or job search resources in data-related fields, check out the server - here!

The Problem:

As more people started joining the “Break Into Data” community, we realized we were missing something important.

Our old system didn’t track more granular metrics such as hours of studying, number of LeetCode challenges solved, etc.

In the previous version, we simply required users to submit proof in the form of a screenshot, and that would count as one submission for that day.

See the function below:

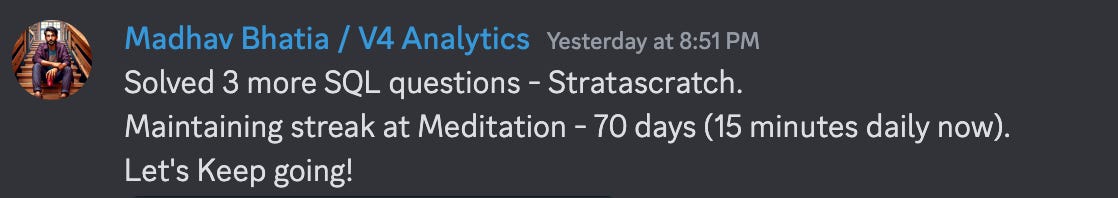

Let’s take an example of a submission below.

As you can see from the screenshot below, Madhav submitted 2 goals: SQL questions and meditation. However, the old system would take it as one entry for that day because he attached only one picture. (I cropped it to save space here.)
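The limitation is easy to see in code. The snippet below is a simplified stand-in for the old rule, not the actual function from our repo: the `Message` class, the `count_entries_old` name, and the message text are assumptions made up for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """Hypothetical, stripped-down view of a Discord message."""
    author: str
    content: str
    attachments: list = field(default_factory=list)


def count_entries_old(message):
    """Old rule as described above: any attached proof counts as one entry."""
    return 1 if message.attachments else 0


# Two goals mentioned in the text, but only one screenshot attached.
msg = Message(
    author="user_a",
    content="Solved SQL questions and meditated today",
    attachments=["proof.png"],
)
print(count_entries_old(msg))  # the text is never read, so this is one entry
```

Because the message text is never inspected, the number of goals a user mentions has no effect on what gets stored.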

So we decided to do something about it…

Possible Solutions

We considered two options to resolve our issue:

1. Create a form where users could input all the data.

Pros: We would get a predictable structure for submissions.

Cons: The information would be visible only to the user, when the entire purpose of the submissions channel is to inspire action and get users to engage with each other. Also, filling out forms is boring. 🙄

2. Integrate an LLM and let it parse the messages into data entries.

Pros: This would allow people to chat naturally, increase engagement and save all of the metrics into our database.

Cons: It would cost us money and it won’t be accurate 100% of the time.

LLM INTEGRATION

All of our contributors agreed that the LLM was the best route.

We rolled up our sleeves and began to integrate our first LLM use case. Exciting!🤩

Most importantly, we were able to do this because our team consisted of people with complementary skill sets, which allowed us to deliver the integration fast.

Thank you to our amazing contributors!

Our team:

Product Owner - Sets the project requirements and maintains the Open Source Project. Meri Nova.

Data scientist - Responsible for ML POC, software engineering and deployment. Kostya Cholak.

Python developer - Integrated Unit Tests. Mishahal Palakuniyil

User testers - Separate thank you to Kelly Adams, Sunita, Madhav Batia, Mert Bozkir for testing and enduring bugs and messages for the entire week. 🤗

Integration:

Here is an overview of the entire LLM integration workflow.

We divided the entire process into 3 steps:

Step 1: Check whether the user has submitted goals previously.

We need this because every submission_id has to have a goal_id that corresponds to a category_id to populate the tables correctly.

Categories are preset in our dimension table “Categories”. Currently, we have the following global categories: fitness, coding, studying, meditation and content creation.
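As a rough picture of Step 1, here is a small sketch using an in-memory SQLite database. Only the `category_id` and `goal_id` columns and the “Categories” table come from our description above. The rest (the `goals` table layout, the `user_id` column, the `get_user_goals` helper, and the sample user) is assumed for illustration and may not match our real schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE categories (
    category_id INTEGER PRIMARY KEY,
    name TEXT UNIQUE NOT NULL
);
CREATE TABLE goals (
    goal_id INTEGER PRIMARY KEY,
    user_id TEXT NOT NULL,
    category_id INTEGER NOT NULL REFERENCES categories(category_id)
);
INSERT INTO categories (name) VALUES
    ('fitness'), ('coding'), ('studying'), ('meditation'), ('content creation');
-- Hypothetical user who committed to two goals.
INSERT INTO goals (user_id, category_id)
    SELECT 'user_a', category_id FROM categories
    WHERE name IN ('coding', 'meditation');
""")


def get_user_goals(conn, user_id):
    """Return {category name: goal_id} for the goals this user committed to."""
    rows = conn.execute(
        "SELECT c.name, g.goal_id FROM goals g "
        "JOIN categories c ON c.category_id = g.category_id "
        "WHERE g.user_id = ?",
        (user_id,),
    ).fetchall()
    return dict(rows)


print(get_user_goals(conn, "user_a"))
print(get_user_goals(conn, "user_b"))  # empty dict: no goals, so skip the LLM call
```

An empty result is the signal to stop early, since a submission without a goal has nothing to point to.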

Step 2: Make an OpenAI API request to return a CSV file.

In our prompt, we include the user’s message along with their preset goals and categories.

We chose CSV output over JSON because its flat, row-based structure leaves less room for variability in the LLM’s output.
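Before looking at the prompt itself, here is a minimal sketch of how the request could be assembled and sent. It assumes a template with a `{categories}` placeholder like the PROMPT that follows, and a client object from the `openai` Python package (v1 style). The function names, the choice to put the prompt in a system message, the `temperature=0` setting, and the `model` argument are our assumptions for this example, not a copy of the production code.

```python
def build_messages(template, categories, user_message):
    """Fill the {categories} placeholder and pair the prompt with the user's text."""
    system_prompt = template.format(categories="\n".join(categories))
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]


def request_csv(client, model, messages):
    """Send the messages through an OpenAI-style client and return the raw reply text.

    `client` is expected to be an `openai.OpenAI()` instance; `model` is whatever
    chat model name you have access to (deliberately not hard-coded here).
    """
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        temperature=0,  # assumption: lower randomness for more consistent CSV
    )
    return response.choices[0].message.content


# Building the payload needs no network access, so it is easy to inspect.
messages = build_messages(
    "Possible categories:\n{categories}",  # shortened stand-in for the real prompt
    ["coding", "meditation"],
    "Did 3 SQL questions and meditated",
)
print(messages[0]["content"])
```

Keeping the message building separate from the API call makes the prompt easy to unit test without spending any tokens.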

PROMPT = """You will be given a user's message and your task is to extract all the metrics out of it and return them as CSV. Only output CSV, no thoughts.

We need to extract this data:

Make sure to use this schema:

<day shift>, <category>, <value>

Day shift is 0 for today's submission, -1 for yesterday's submission and so on.

If no time is mentioned, then it is 0.

Only provide metrics from the user's goals, ignore others.

Possible categories:

{categories}

Only output data that matches the categories (and the "specifically" part if present).

If the user did not specify the value, but the category is mentioned as completed, then the value is "true" (meaning that the user completed the goal, but the value is unknown).

If the user says that they did not complete the goal, then the value is "false".

"""

Step 3: Once we get the CSV, we parse it into structured data entries.

The async process_submission_message method is the main function that orchestrates the entire workflow and populates the submissions table. See below.
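To show how the three steps fit together, here is a simplified sketch of the flow. It is not the real process_submission_message: the `process_submission_sketch` and `parse_llm_csv` names, the injected `get_goals`, `ask_llm` and `save_entry` callables, and the fake data in the demo are all hypothetical stand-ins for the database and the OpenAI call.

```python
import asyncio
import csv
import io


def parse_llm_csv(csv_text, allowed_categories):
    """Turn '<day shift>, <category>, <value>' rows into dict entries."""
    entries = []
    for row in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(row) != 3:
            continue  # skip blank lines and malformed rows
        day_shift, category, value = (cell.strip() for cell in row)
        category = category.lower()
        try:
            shift = int(day_shift)
        except ValueError:
            continue  # e.g. a header row the model added anyway
        if category not in allowed_categories:
            continue  # only keep metrics for the user's own goals
        # "true"/"false" mean done / not done with no known amount.
        if value.lower() in ("true", "false"):
            value = value.lower() == "true"
        entries.append({"day_shift": shift, "category": category, "value": value})
    return entries


async def process_submission_sketch(user_id, text, get_goals, ask_llm, save_entry):
    """Goals check, then LLM request, then CSV parsing, then one saved row per metric."""
    goals = await get_goals(user_id)  # hypothetical shape: {category name: goal_id}
    if not goals:
        return []  # Step 1: no goals, nothing to attach a submission to
    csv_text = await ask_llm(text, sorted(goals))  # Step 2
    entries = parse_llm_csv(csv_text, set(goals))  # Step 3
    for entry in entries:
        await save_entry(goals[entry["category"]], entry)
    return entries


# Demo with in-memory stand-ins for the database and the OpenAI call.
saved = []


async def fake_get_goals(user_id):
    return {"coding": 1, "meditation": 2}


async def fake_ask_llm(text, categories):
    return "0, coding, 3\n0, meditation, true\n0, cooking, 1"


async def fake_save_entry(goal_id, entry):
    saved.append((goal_id, entry["day_shift"], entry["value"]))


result = asyncio.run(
    process_submission_sketch(
        "user_a",
        "Did 3 SQL questions and meditated",
        fake_get_goals,
        fake_ask_llm,
        fake_save_entry,
    )
)
print(saved)
```

The parser drops anything it cannot trust (wrong column count, a non-numeric day shift, or a category outside the user's goals), which is one way to live with an LLM that is not accurate 100% of the time.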

End Result

After rigorous testing and prompt engineering, our bot was able to output consistent results into our database.

In the image below you can find a successful example submission with this integration.

Our bot saved Kelly’s message “Studied Japanese for 20 minutes today” as a data entry with category: “Studying” and metric: “20 min”.

Make sure to check out the entire code base and ⭐️ star ⭐️ our GitHub repo! If you are interested in contributing, check our GitHub issues page.

Conclusion

We are excited to integrate more features using LLMs and other cutting-edge open source technologies and to keep sharing our progress.

If you are looking to collaborate with us, join our active Discord server “Break Into Data”, where you can find other contributors and commit to your own goals with the support of the data community!
